1.3. Job Scheduling -- LSF

The EDA platform uses IBM Platform LSF to schedule and manage jobs.

Platform Queues

The platform currently provides the following main queues:

cpu: A queue consisting of 10 CPU nodes.

bmcpu: A queue consisting of 10 BMCPU nodes.

gpu: A queue consisting of 4 GPU nodes.

interactive: An interactive task queue.

normal: A general queue; jobs are submitted to this queue if no specific queue is designated.

Common Operations:

1. Submitting Jobs with bsub:

bsub -o output.log -e error.log echo hello-world

-o output.log: Redirects the standard output of the job to output.log

-e error.log: Redirects the error output of the job to error.log
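As a sketch of how -o and -e are often combined, the helper below gives every job its own pair of log files. It relies on the %J placeholder, which bsub replaces with the job ID in -o and -e file names. The function name submit_logged, the logs directory, and the DRY_RUN switch are assumptions made for illustration; they are not part of the platform.

```shell
#!/bin/sh
# Hypothetical helper: submit a command with per-job log files.
# %J is expanded by LSF to the job ID, so logs from different jobs
# do not overwrite each other.
submit_logged() {
    log_dir=${LOG_DIR:-logs}    # assumed directory name, override with LOG_DIR
    if [ "${DRY_RUN:-0}" = "1" ]; then
        # Print the command instead of submitting it (useful off-cluster).
        echo bsub -o "$log_dir/%J.out" -e "$log_dir/%J.err" "$@"
    else
        mkdir -p "$log_dir"     # create the log directory before submitting
        bsub -o "$log_dir/%J.out" -e "$log_dir/%J.err" "$@"
    fi
}
```

With DRY_RUN=1 set, "submit_logged echo hello-world" only prints the bsub line so it can be reviewed before a real submission.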

bsub -Is myjob.sh

-Is: Submits a job in interactive mode, allowing user interaction with the job

bsub -q [cpu | bmcpu | gpu] -m cpuXX

-q cpu/bmcpu/gpu: Specifies which queue the job should be submitted to

cpu queue: 10 CPU nodes, cpu01 to cpu10. Hardware configuration per node: 2 × 32 cores @ 2.0 GHz, 1 TB RAM

bmcpu queue: 10 BMCPU nodes, bmcpu01 to bmcpu10. Hardware configuration per node: 2 × 32 cores @ 2.0 GHz, 2 TB RAM

gpu queue: 4 GPU nodes, gpu01 to gpu04. Hardware configuration per node: 2 × 28 cores @ 2.6 GHz, 512 GB RAM, 8 × A30 GPUs

-m gpu01: Runs the job on node gpu01. Typically used for Docker jobs, so that an image already present on that node is reused instead of being pulled from the local repository on every run.

bsub -n 8 -gpu "num=2"

-n 8: Requests 8 cores

-gpu "num=2": Requests 2 A30 GPUs. Must be used in conjunction with "-q gpu" or "-m gpu0X".
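The options above can be combined into a single command line. The sketch below prints a bsub command for a GPU job after a basic sanity check. The function name gpu_job_cmd is hypothetical, and the limit of 8 GPUs is taken from the per-node hardware description of the gpu queue, not from any LSF setting.

```shell
#!/bin/sh
# Hypothetical helper: print a bsub command line for a GPU job.
# Usage: gpu_job_cmd CORES GPUS COMMAND [ARGS...]
gpu_job_cmd() {
    if [ "$#" -lt 3 ]; then
        echo "usage: gpu_job_cmd CORES GPUS COMMAND [ARGS...]" >&2
        return 1
    fi
    cores=$1
    gpus=$2
    shift 2
    # Reject anything that is not a plain non-negative integer.
    case $gpus in
        ''|*[!0-9]*) echo "GPU count must be an integer" >&2; return 1 ;;
    esac
    # Each gpu node has 8 A30 cards, so one host cannot supply more.
    if [ "$gpus" -lt 1 ] || [ "$gpus" -gt 8 ]; then
        echo "GPU count must be between 1 and 8" >&2
        return 1
    fi
    # -gpu only makes sense on a GPU host, so the gpu queue is fixed here.
    printf 'bsub -q gpu -n %s -gpu "num=%s" %s\n' "$cores" "$gpus" "$*"
}
```

For example, "gpu_job_cmd 8 2 python train.py" prints a line that requests 8 cores and 2 GPUs in the gpu queue; train.py is a placeholder command.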

2. Check Job Status and Force Kill Jobs

bjobs lists your unfinished jobs; bkill JOB_ID terminates the job with the given ID.

[simonyjhe@manager01 ~]$ bsub -q cpu sleep 180 &

[1] 10190

[simonyjhe@manager01 ~]$ Job <10190> is submitted to queue <cpu>.

[1]+ Done bsub -q cpu sleep 180

[simonyjhe@manager01 ~]$ bjobs

JOBID USER STAT QUEUE FROM_HOST EXEC_HOST JOB_NAME SUBMIT_TIME

10190 simonyj RUN normal manager01 cpu02 sleep 180 Dec 1 01:08

[simonyjhe@manager01 ~]$ bkill 10190

Job <10190> is being terminated

[simonyjhe@manager01 ~]$ bjobs

No unfinished job found

[simonyjhe@manager01 ~]$

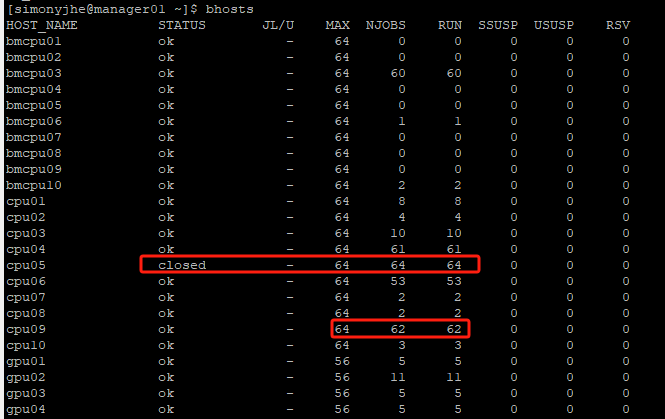
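In scripts it is often convenient to capture the job ID at submission time instead of reading it from the terminal. The sketch below extracts the ID from the confirmation line that bsub prints, in the form shown in the session above. The function names parse_job_id and submit_and_get_id are hypothetical.

```shell
#!/bin/sh
# Extract the numeric job ID from bsub's confirmation line, e.g.
#   Job <10190> is submitted to queue <cpu>.
# Prints nothing if the text does not match that pattern.
parse_job_id() {
    printf '%s\n' "$1" | sed -n 's/^Job <\([0-9][0-9]*\)> is submitted.*/\1/p'
}

# Hypothetical wrapper: submit a job and print only its ID,
# so the caller can pass it to bjobs or bkill later.
submit_and_get_id() {
    out=$(bsub "$@") || return 1
    parse_job_id "$out"
}
```

A typical use would be "id=$(submit_and_get_id -q cpu sleep 180)" followed later by "bkill $id".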

3. Check Node Load: bhosts
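As a rough sketch of how bhosts output can be used when choosing a node for -m, the filter below lists hosts whose status is ok together with their free job slots (MAX minus NJOBS). It assumes the default bhosts column order (HOST_NAME, STATUS, JL/U, MAX, NJOBS, ...); the function name free_slots is hypothetical.

```shell
#!/bin/sh
# Read default-format bhosts output on stdin and print "host free_slots"
# for every host that is currently accepting jobs.
free_slots() {
    # NR > 1 skips the header; hosts with no numeric MAX ("-") are ignored.
    awk 'NR > 1 && $2 == "ok" && $4 ~ /^[0-9]+$/ { print $1, $4 - $5 }'
}
```

On the cluster this would be run as "bhosts | free_slots".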

4. Job Submission Template:

#!/bin/sh

#BSUB -R "select[gpu_musedi<Used_memory]" # (i: gpu_id; Used_memory: 24576 - Required_mem, in MiB)

#BSUB -m "gpu01" # (CAN BE "cpu0x, gpu0x, bmcpu0x", use bhosts for detail)

#BSUB -J YOUR_JOB_NAME

#BSUB -n JOB_NUM # (number of cores; upper limit: 16/user)

#BSUB -gpu "num=NUM"

#BSUB -o YOUR_OUTPUT_FILE

#BSUB -e YOUR_ERROR_FILE

#BSUB -W RUN_TIME_LIMIT

module load anaconda3

module load YOUR_CUDA_VERSION #(ps: check the available packages by "module avail")

source activate YOUR_PYTHON_ENV

YOUR_COMMAND
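The Used_memory value in the template's -R line is described as 24576 minus the memory the job needs, in MiB (24576 MiB corresponds to the 24 GB of one A30). The sketch below does that subtraction; the function name gpu_mem_threshold is hypothetical.

```shell
#!/bin/sh
# Compute the Used_memory threshold for the -R line of the template:
# total GPU memory (24576 MiB) minus the memory the job requires.
gpu_mem_threshold() {
    total_mib=24576
    required_mib=$1
    # Accept only a plain non-negative integer.
    case $required_mib in
        ''|*[!0-9]*) echo "required memory must be an integer (MiB)" >&2; return 1 ;;
    esac
    if [ "$required_mib" -gt "$total_mib" ]; then
        echo "required memory exceeds $total_mib MiB" >&2
        return 1
    fi
    echo $((total_mib - required_mib))
}
```

For a job that needs 8192 MiB of GPU memory, "gpu_mem_threshold 8192" prints 16384, which is the value to substitute for Used_memory.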