12.10 Introduction to MindFormers

The goal of MindSpore Transformers (MindFormers) is to provide a full-process development suite for large-model training, fine-tuning, evaluation, inference, and deployment. It offers the industry's mainstream Transformer-based pre-trained models and state-of-the-art (SOTA) downstream-task applications, together with a rich set of parallel features, so that users can train large models and carry out research and development with little effort. Built on MindSpore's built-in parallel technology and a componentized design, the MindFormers suite has the following features:

● Seamless switching from single-card to large-scale cluster training with a single line of code;

● Flexible, easy-to-use, personalized parallel configuration;

● Automatic topology sensing, with efficient fusion of data-parallel and model-parallel strategies;

● One-click launch of single-card or multi-card training, fine-tuning, evaluation, and inference for any task;

● Componentized configuration of any module, such as the optimizer, learning-rate strategy, and network assembly;

● High-level, easy-to-use interfaces such as Trainer, pipeline, and AutoClass;

● Automatic download and loading of preset SOTA weights;

● Seamless migration and deployment on AI computing centers.
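Several of the features above (switching from a single card to a cluster, personalized parallel configuration, and fusing data and model parallelism) relate to one piece of arithmetic: in this style of hybrid parallelism, the data-parallel, model-parallel, and pipeline degrees are usually chosen so that their product equals the number of devices. The sketch below is a hypothetical, library-independent illustration of that check. The field names `data_parallel`, `model_parallel`, and `pipeline_stage` are assumed to mirror the keys of a MindFormers `parallel_config` section, and none of the classes or functions shown are MindFormers APIs.

```python
from dataclasses import dataclass


@dataclass
class ParallelConfig:
    """Hypothetical stand-in for a parallel_config section (field names assumed)."""
    data_parallel: int = 1
    model_parallel: int = 1
    pipeline_stage: int = 1

    def required_devices(self) -> int:
        # Each device holds exactly one (data, model, pipeline) slot,
        # so the three degrees multiply to the total device count.
        return self.data_parallel * self.model_parallel * self.pipeline_stage


def check_parallel_config(cfg: ParallelConfig, device_num: int) -> None:
    """Raise ValueError if the strategy does not tile the devices exactly."""
    for name in ("data_parallel", "model_parallel", "pipeline_stage"):
        if getattr(cfg, name) < 1:
            raise ValueError(f"{name} must be >= 1")
    needed = cfg.required_devices()
    if needed != device_num:
        raise ValueError(
            f"data_parallel * model_parallel * pipeline_stage = {needed}, "
            f"but {device_num} devices are available"
        )


def suggest_config(device_num: int, model_parallel: int = 1,
                   pipeline_stage: int = 1) -> ParallelConfig:
    """Hypothetical heuristic: fill the remaining devices with data parallelism."""
    if model_parallel < 1 or pipeline_stage < 1:
        raise ValueError("model_parallel and pipeline_stage must be >= 1")
    group = model_parallel * pipeline_stage
    if device_num % group != 0:
        raise ValueError(f"{device_num} devices cannot be split into groups of {group}")
    cfg = ParallelConfig(device_num // group, model_parallel, pipeline_stage)
    check_parallel_config(cfg, device_num)
    return cfg


if __name__ == "__main__":
    # Single card: every parallel degree is 1.
    print(suggest_config(1))
    # 8 cards with 4-way model parallelism leave 2-way data parallelism.
    print(suggest_config(8, model_parallel=4))
```

Filling the leftover devices with data parallelism is only a simple heuristic chosen for illustration; the automatic topology sensing described above selects strategies by its own criteria.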

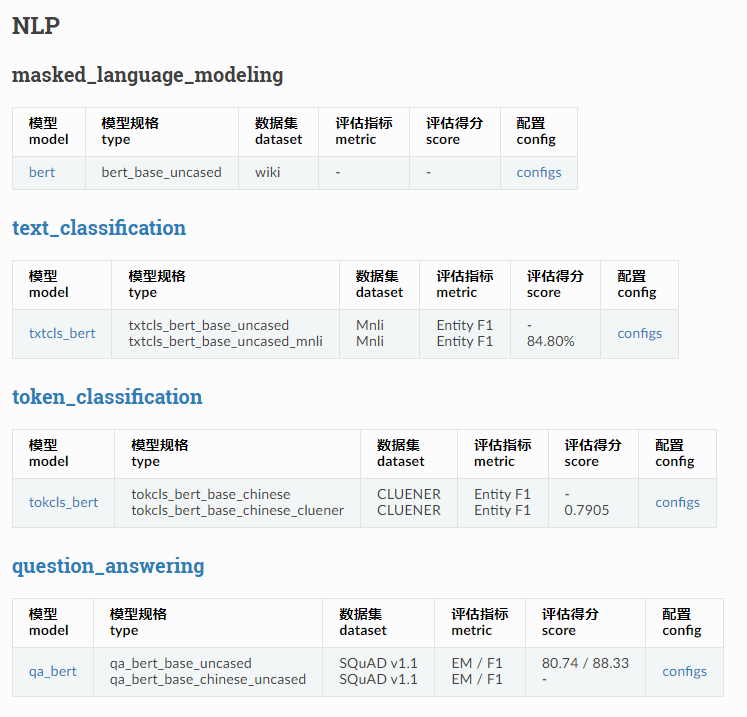

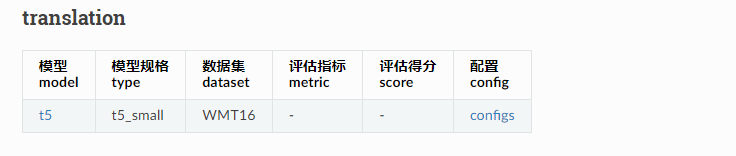

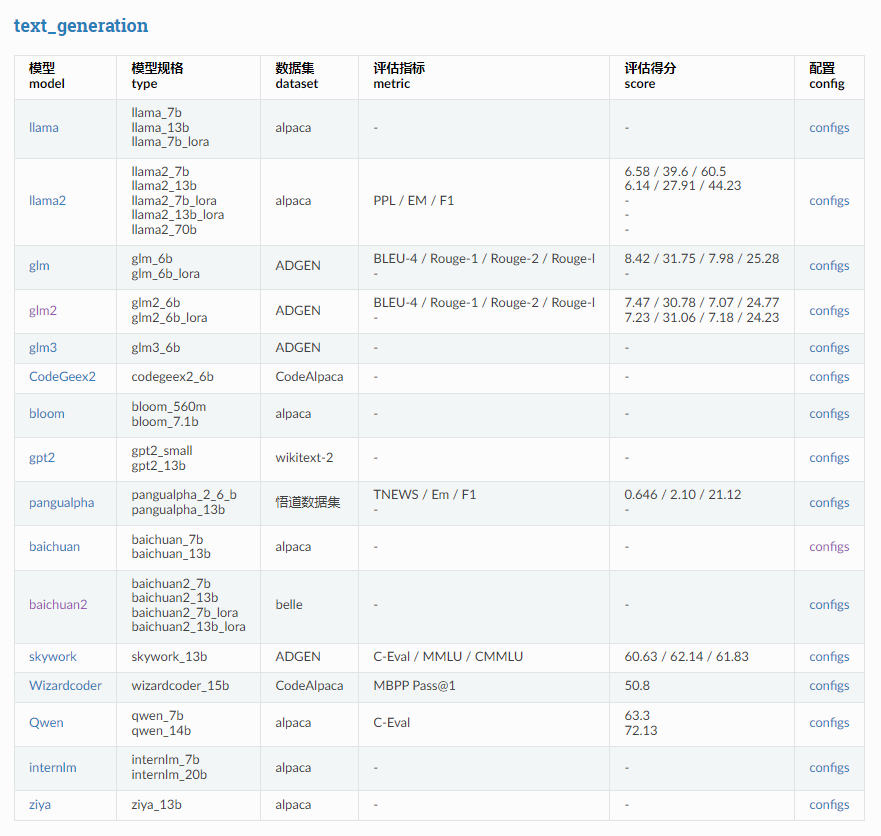

The list of currently supported models is as follows:

| Model family | Supported model names |
|---|---|
| LLama2 | llama2_7b, llama2_13b, llama2_7b_lora, llama2_13b_lora, llama2_70b |
| GLM2 | glm2_6b, glm2_6b_lora |
| CodeGeex2 | codegeex2_6b |
| LLama | llama_7b, llama_13b, llama_7b_lora |
| GLM | glm_6b, glm_6b_lora |
| Bloom | bloom_560m, bloom_7.1b |
| GPT2 | gpt2, gpt2_13b |
| PanGuAlpha | pangualpha_2_6_b, pangualpha_13b |
| BLIP2 | blip2_stage1_vit_g |
| CLIP | clip_vit_b_32, clip_vit_b_16, clip_vit_l_14, clip_vit_l_14@336 |
| T5 | t5_small |
| sam | sam_vit_b, sam_vit_l, sam_vit_h |
| MAE | mae_vit_base_p16 |
| VIT | vit_base_p16 |
| Swin | swin_base_p4w7 |
| skywork | skywork_13b |
| Baichuan2 | baichuan2_7b, baichuan2_13b, baichuan2_7b_lora, baichuan2_13b_lora |
| Baichuan | baichuan_7b, baichuan_13b |
| Qwen | qwen_7b, qwen_14b, qwen_7b_lora, qwen_14b_lora |
| Wizardcoder | wizardcoder_15b |
| Internlm | internlm_7b, internlm_20b, internlm_7b_lora |
| ziya | ziya_13b |
| VisualGLM | visualglm |

Model Support List
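Most names in the second column follow a regular pattern: a family prefix, a size such as `7b` or `13b`, and an optional `_lora` suffix marking the LoRA fine-tuning variant. The sketch below is a hypothetical helper, not part of MindFormers, that stores a subset of the list and answers two common questions before a name is handed to a high-level interface: which family a name belongs to, and which LoRA variants a family offers. The dictionary name and both functions are assumptions made for illustration.

```python
# Hypothetical lookup table: a subset of the Model Support List,
# keyed by model family. Extend it with the remaining rows as needed.
SUPPORTED_MODELS: dict[str, list[str]] = {
    "LLama2": ["llama2_7b", "llama2_13b", "llama2_7b_lora",
               "llama2_13b_lora", "llama2_70b"],
    "GLM2": ["glm2_6b", "glm2_6b_lora"],
    "Qwen": ["qwen_7b", "qwen_14b", "qwen_7b_lora", "qwen_14b_lora"],
    "Bloom": ["bloom_560m", "bloom_7.1b"],
    "CLIP": ["clip_vit_b_32", "clip_vit_b_16", "clip_vit_l_14", "clip_vit_l_14@336"],
}


def family_of(name: str) -> str:
    """Return the family that lists `name`, or raise KeyError if it is unsupported."""
    for family, names in SUPPORTED_MODELS.items():
        if name in names:
            return family
    raise KeyError(f"{name!r} is not in the support list")


def lora_variants(family: str) -> list[str]:
    """Return the names in `family` that end in '_lora' (LoRA fine-tuning variants)."""
    return [n for n in SUPPORTED_MODELS[family] if n.endswith("_lora")]


if __name__ == "__main__":
    print(family_of("glm2_6b_lora"))
    print(lora_variants("LLama2"))
```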