Model Training

Path: Artificial Intelligence > Code Development and Training > Model Training

Feature Overview

When creating a training task, you can:

- Select a model from the model library

- Set hyperparameters

- Select a training dataset

- Specify the resource specifications used to run the task

Training tasks can also be started as distributed training.

During training, you can:

- View task details, including the training task ID, training dataset, training logs, and other information

- Start a visualization task to view training visualizations in TensorBoard or MindInsight, and retrieve the task's run logs

- Compare experiment results using the platform's comparison features

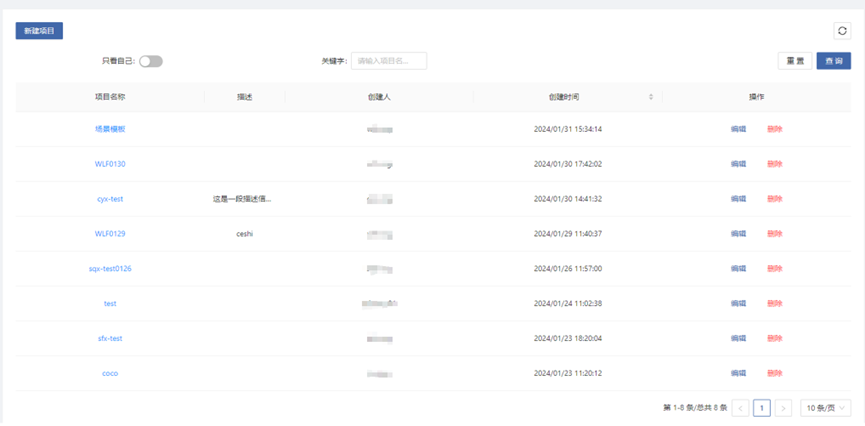

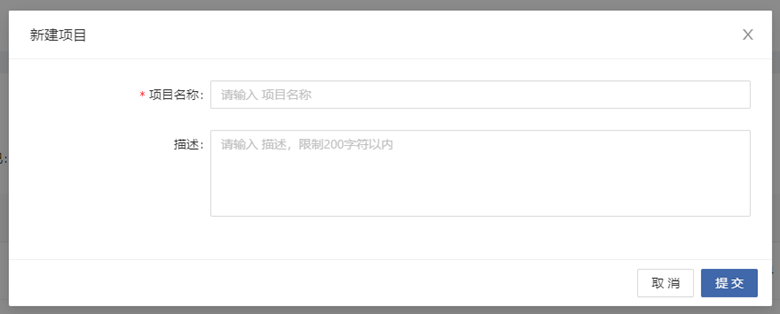

Create a Project

Before training or evaluating a model, create a model training project.

Train the Model

Create a Training Task

On the "Create Model Training" page, select a model from the model library and fill in the following parameters:

- Model Name: Click the browse button and select the required model from the pop-up model list. The model's details are then filled in automatically, including its tags, image, CPU architecture, conda environment, training visualization, and distributed training settings.

- Incremental Training: If the model supports incremental training, you can select a weight file output by an existing task as the pre-trained model.

- Hyperparameter Configuration: Train with the default values, or modify the parameters as needed.

- Data Configuration: Select one or more datasets. If you select multiple datasets, the algorithm code must handle them itself.

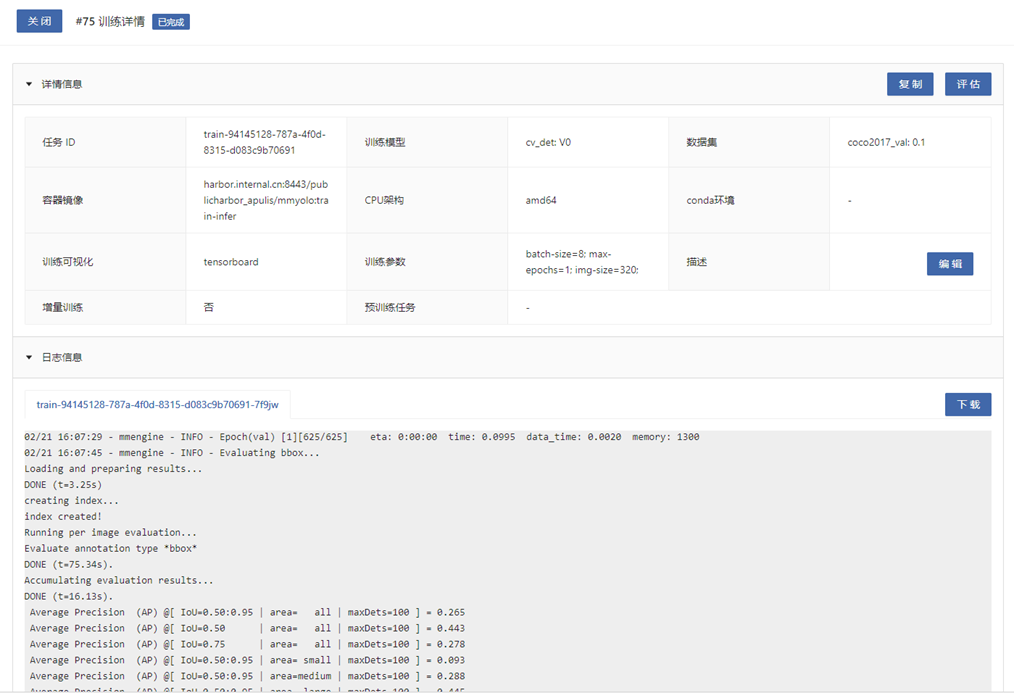

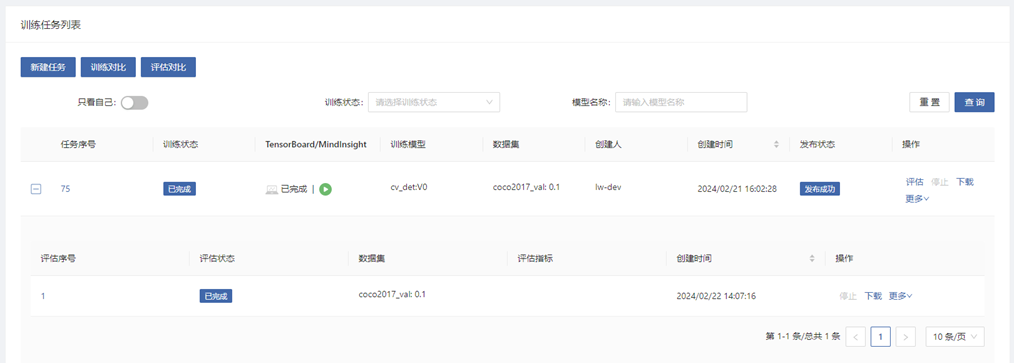
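How the platform hands hyperparameters, dataset locations, and a pre-trained weight file to the algorithm code is not specified in this section. The following is a minimal sketch that assumes they arrive as command-line arguments; every argument name in it (--learning_rate, --batch_size, --data_paths, --pretrained_weights) is hypothetical and must match the names actually used in the model's training configuration. It shows one way for the code to accept several datasets and an optional weight file for incremental training.

```python
import argparse


def parse_args(argv=None):
    """Parse training arguments. All argument names here are hypothetical examples."""
    parser = argparse.ArgumentParser(description="Example training entry point")
    # Hyperparameters: the defaults apply when no value is passed in
    parser.add_argument("--learning_rate", type=float, default=0.001)
    parser.add_argument("--batch_size", type=int, default=32)
    # One or more dataset paths; the algorithm code itself decides how to combine them
    parser.add_argument("--data_paths", nargs="+", required=True)
    # Optional weight file from an earlier task, used for incremental training
    parser.add_argument("--pretrained_weights", default=None)
    return parser.parse_args(argv)


def training_mode(args):
    """Report whether this run starts from pre-trained weights or from scratch."""
    return "incremental" if args.pretrained_weights else "from scratch"
```

For example, parse_args(["--data_paths", "/data/a", "/data/b"]) keeps both (hypothetical) dataset paths in args.data_paths and uses the default hyperparameters. The training code would then iterate over args.data_paths and, when args.pretrained_weights is set, load that file before the first training step.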

View Task Details

In the task list, click a task's number to view its training details, including the training log. When the training status changes to "Completed", training has finished, and you can view and download the model files, including the weight file.

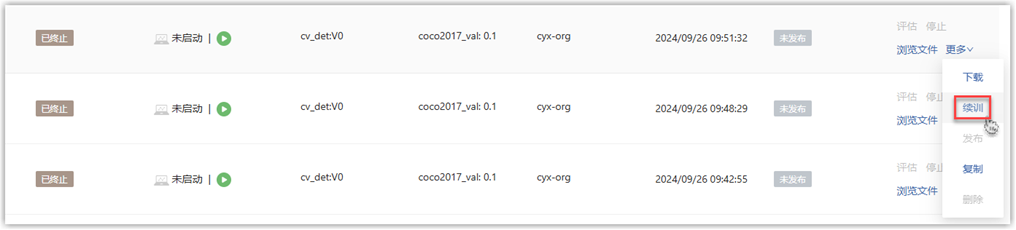

Resume Training from Checkpoint

If a training task is interrupted, you can resume it from its latest checkpoint. In the training task list, click "More > Resume Training" on the right side of the task, and training resumes from the checkpoint automatically.

Not all models support resuming from a checkpoint. The model must meet the following conditions:

- The model code writes its checkpoint data to the "Task Output Path" it receives from the platform parameters.

- When training resumes, the model code reads the checkpoint data from this path.

- The "Resume Training from Checkpoint" switch is turned on in the model's training configuration.
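The conditions above amount to a simple contract: write checkpoints under the task output path, and look there first when the task starts. The sketch below illustrates that contract using only the Python standard library. It assumes output_path is the task output path received from the platform; the file name checkpoint.json and the state layout are hypothetical, and a real model would save its framework's weights with that framework's own save and load functions rather than a JSON dictionary.

```python
import json
import os
import tempfile

CHECKPOINT_NAME = "checkpoint.json"  # hypothetical file name


def save_checkpoint(output_path, state):
    """Write the training state to the task output path atomically."""
    os.makedirs(output_path, exist_ok=True)
    final_path = os.path.join(output_path, CHECKPOINT_NAME)
    # Write to a temporary file first so an interruption never leaves a half-written checkpoint
    fd, tmp_path = tempfile.mkstemp(dir=output_path, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, final_path)


def load_checkpoint(output_path):
    """Return the saved state, or None if no checkpoint exists yet."""
    path = os.path.join(output_path, CHECKPOINT_NAME)
    if not os.path.exists(path):
        return None
    with open(path) as f:
        return json.load(f)


def train(output_path, total_epochs, stop_after=None):
    """Toy training loop that resumes from the latest checkpoint if one exists.

    stop_after simulates an interruption after that many epochs in this call.
    """
    state = load_checkpoint(output_path) or {"epoch": 0, "weights": 0.0}
    epochs_run = 0
    while state["epoch"] < total_epochs:
        if stop_after is not None and epochs_run >= stop_after:
            break  # simulated interruption
        state["weights"] += 1.0  # stand-in for one epoch of real training
        state["epoch"] += 1
        epochs_run += 1
        save_checkpoint(output_path, state)  # checkpoint after every epoch
    return state
```

Because train() always calls load_checkpoint() before its loop, restarting the same code after an interruption continues from the last saved epoch instead of starting over.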

Evaluate the Model

After a training task has completed, you can evaluate the trained model.

Create an Evaluation Task

In the training task list, click the "Evaluate" button on the right side of a training task. On the "Create Model Evaluation" page that opens, fill in the relevant parameters.

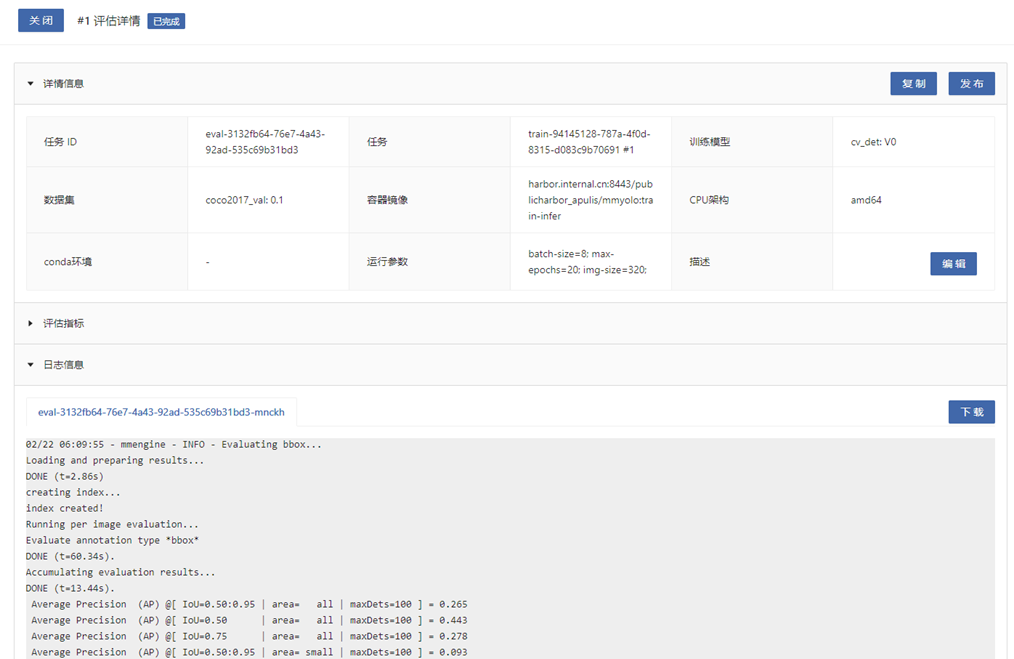

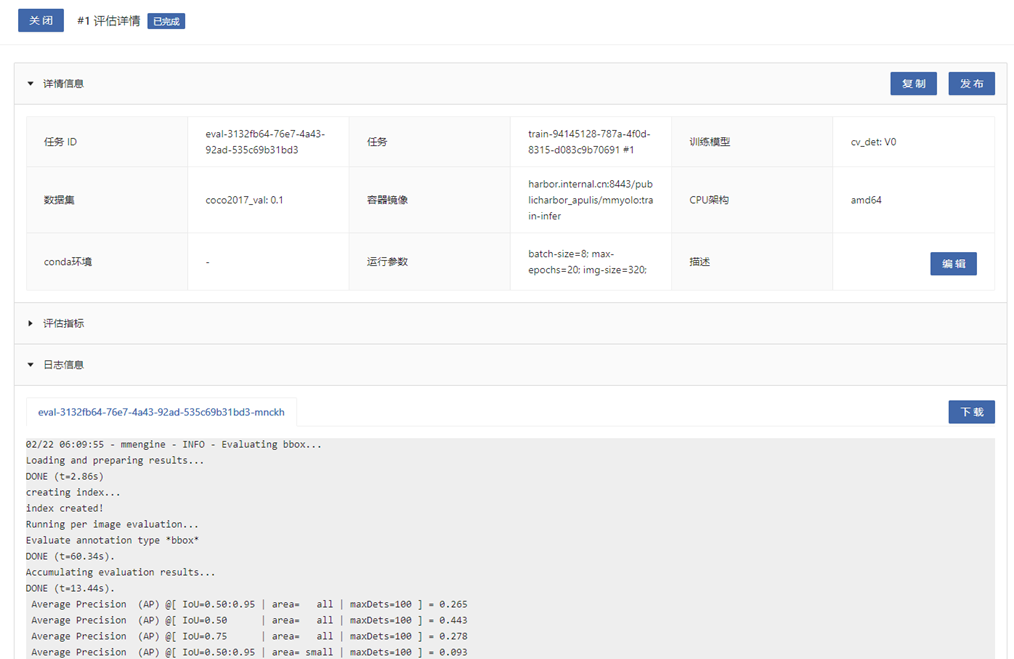

View Evaluation Task Details

Click an evaluation's number to view its details, metrics, logs, and other information.

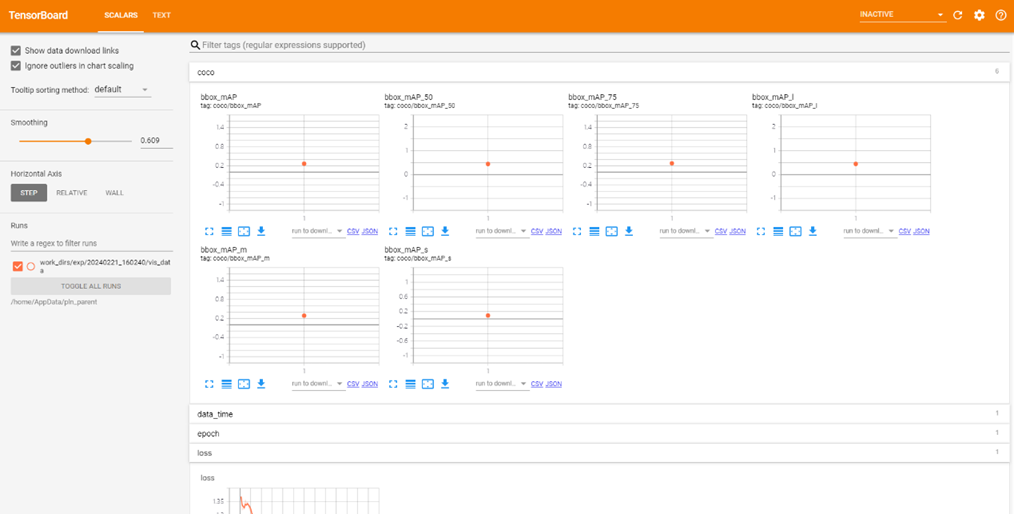

Training Visualization

Training tasks can be visualized with either TensorBoard or MindInsight.

Visualization has the following requirements:

- The model algorithm must write its visualization data to the training task output path, in the format that TensorBoard or MindInsight expects.

- The model algorithm must accept the training task output path, which the platform passes in as a command-line parameter. Set the name of this command-line parameter in the training configuration on the model's details page in the model library.

To view the visualization while the training task is running, click the start button in the "TensorBoard/MindInsight" column of the task list. When the status changes to running, click the open button to view the TensorBoard or MindInsight content in a new window.
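The two requirements above can be sketched for TensorBoard with a PyTorch model. This is a minimal example under assumptions: the parameter name --output_path is hypothetical and must match the name set in the model's training configuration, and the event files are written directly into the output path, since this section does not say whether a subdirectory is expected. MindInsight reads summary files produced by MindSpore's own summary APIs, which are not shown here.

```python
import argparse


def get_output_path(argv=None):
    """Read the training task output path passed by the platform on the command line."""
    parser = argparse.ArgumentParser()
    # Hypothetical parameter name; use the name configured in the model's training configuration
    parser.add_argument("--output_path", required=True)
    # parse_known_args ignores any other parameters the platform may pass
    args, _unknown = parser.parse_known_args(argv)
    return args.output_path


def log_losses(output_path, losses):
    """Write one scalar per step so TensorBoard can plot the loss curve."""
    # Imported here so that get_output_path() can be used without PyTorch installed
    from torch.utils.tensorboard import SummaryWriter

    writer = SummaryWriter(log_dir=output_path)
    for step, loss in enumerate(losses):
        writer.add_scalar("train/loss", loss, global_step=step)
    writer.close()
```

In a real script you would call get_output_path() once at startup and keep a single SummaryWriter open for the whole run, calling add_scalar after each step; the TensorBoard instance started from the task list can then read the event files from that path.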

Experiment Comparison

You can compare experiments: training runs after model training, evaluation runs, and debugging training runs after code development. A comparison shows the output metrics of multiple training and evaluation sessions and displays the results visually.

The tracking SDK used below is Aim; for details, refer to the official Aim documentation.

Track Comparison Metrics

Before comparing, specify the metrics to compare in the model's algorithm code and track their values. To do so, use the experiment data visualization SDK provided by the platform to record the training and evaluation metrics.

Install the SDK

In the development environment, open a command line through JupyterLab or SSH and install the aim package, for example with pip install aim.

Initialize

In the experiment script, import the aim package and create a Run object. A Run object usually corresponds to one model training or evaluation experiment.

from aim import Run

run = Run()

Declare Hyperparameters

Record the experiment's hyperparameters so they can be observed and analyzed later.

run['hparams'] = {
    'learning_rate': 0.001,
    'batch_size': 32,
}

Record Metrics

At the appropriate points in the training or evaluation code, call track to record metrics, where value is the metric value and name is the metric name.

run.track(value, name='name')
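Aim's track method also accepts optional step, epoch, and context arguments. The sketch below is an illustrative pattern rather than a platform requirement: it uses context to keep training and validation values of the same metric apart, which may make runs easier to read when they are compared. The helper name track_epoch and the context key "subset" are hypothetical choices. The helper only needs an object with a compatible track method, so it can be exercised without Aim installed.

```python
def track_epoch(run, epoch, train_loss, val_loss, val_accuracy):
    """Record one epoch's metrics on an Aim Run (or any object with a compatible track method)."""
    # The same metric name with different contexts keeps training and validation series separate
    run.track(train_loss, name="loss", epoch=epoch, context={"subset": "train"})
    run.track(val_loss, name="loss", epoch=epoch, context={"subset": "val"})
    run.track(val_accuracy, name="accuracy", epoch=epoch, context={"subset": "val"})
```

In a training loop, call it once per epoch with the Run object created in the Initialize step.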

Complete Example

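from aim import Run

# One Run usually corresponds to one training or evaluation experiment
run = Run()

# Record hyperparameters for later observation and comparison
run['hparams'] = {
    'learning_rate': 0.001,
    'batch_size': 32,
}

for epoch in range(3):
    ...  # Model training code for one epoch
    loss = 1.0 / (epoch + 1)  # Placeholder: replace with the real training loss
    run.track(loss, name='loss', epoch=epoch)

...  # Model evaluation code
accuracy = 0.9  # Placeholder: replace with the real evaluation metric
run.track(accuracy, name='accuracy')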

Training Comparison

Open a model training project in which several training tasks have completed.

- Click the "Training Comparison" button on the project details page. A window for selecting training tasks appears.

- In the window, move the training tasks you want to compare into the target list on the right, then click the "Compare" button.

- The training comparison page shows a comparison of the selected tasks' metrics.

- On the training comparison page, click "Visual Comparison" to compare the metrics visually.

Evaluation Comparison

Open a model training project in which several evaluation tasks have completed.

- Click the "Evaluation Comparison" button on the project details page. A window for selecting evaluation tasks appears.

- In the window, move the evaluation tasks you want to compare into the target list on the right, then click the "Compare" button.

- The evaluation comparison page shows a comparison of the selected tasks' metrics.

- On the evaluation comparison page, click "Visual Comparison" to compare the metrics visually.

Publish the Model

After training or evaluation, you can publish the trained weights as a new model or as a new version of an existing model.

On the project details page, click "More > Publish" for a completed task to open the publishing page.

On the publishing page, fill in the following parameters:

- Model Name: If the name matches an existing model, the weights are published as a new version of that model; otherwise, they are published as a new model.

- Description: A description of the model.

- Tags: Create new tags or select existing ones to make the model easier to describe and filter.

- Published Content: Select files from the training task output directory. They are copied to the model directory of the new model package during publishing.

Click the publish button to publish the model to the model library with the information above. The publishing status is shown in the training task list.

Configuration Update After Publishing

A newly published model inherits the training, evaluation, and inference configurations of the model it was trained from. To adjust them, update them on the model's details page in the model library.