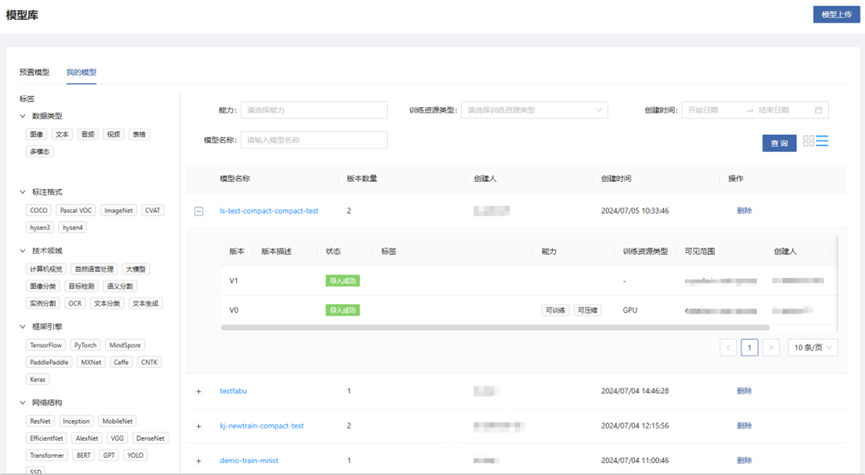

Model Repository

The model repository serves as the model management center, where all models generated from development or training tasks can be imported and centrally managed. It also supports uploading local models. It provides features such as version control and comes preloaded with a collection of models for a variety of application scenarios.

Path: Artificial Intelligence --- Code Development and Training --- Model Management

Model Upload

You can upload locally developed models to the model repository for unified management.

If the uploaded model is a user-defined model package (which can contain any files), the files within the model package will be stored in the model's algorithm directory after upload.

If the uploaded model package was obtained by clicking the "Download All" button in the model repository (it contains a code folder, a model folder, and a manifest file), the contents of the code folder and the model folder will be stored in the model's algorithm directory and model directory, respectively, after upload.

Model Details

Training Configuration

After you turn on the training configuration switch, the model gains the "trainable" capability, and this model version can be used for model training.

The page displays the configuration information used for model training, including startup environment configuration, received platform parameters, hyperparameters, recommended resource configuration, visualization settings, and distributed training options.

Startup Environment Configuration

Image: The image used when starting the task.

CPU Architecture: The CPU architecture used by the image and resources.

Use Conda Environment: Whether to use the Conda environment for training. If the Conda environment is used, do not delete or modify it arbitrarily; otherwise the task may fail.

Startup Entry: The startup entry within the image. If the startup script is a .py file, the startup entry can be set to python; if the startup script is a .sh file, the startup entry can be set to bash.

Startup Script: Select the startup script file from the algorithm directory of the model version. Only .py or .sh formats are supported.

Startup Command Preview: You can preview the complete startup command.
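To illustrate how the startup entry, startup script, and command-line parameters fit together, here is a minimal sketch in Python. It is not the platform's implementation: the function names, the parameter names, and the exact command layout are assumptions made for illustration, and the real preview on the page may be formatted differently.

```python
import shlex
from pathlib import PurePosixPath

# Suggested startup entry for each supported script type (.py -> python, .sh -> bash).
ENTRY_BY_SUFFIX = {".py": "python", ".sh": "bash"}


def startup_entry(script):
    """Return the suggested startup entry for a startup script."""
    suffix = PurePosixPath(script).suffix
    if suffix not in ENTRY_BY_SUFFIX:
        raise ValueError("only .py or .sh startup scripts are supported")
    return ENTRY_BY_SUFFIX[suffix]


def preview_command(script, params):
    """Assemble a command string: entry, script, then --name value pairs.

    The layout is an assumption for illustration only.
    """
    parts = [startup_entry(script), script]
    for name, value in params.items():
        parts += [f"--{name}", str(value)]
    return shlex.join(parts)
```

For example, `preview_command("train.py", {"data_path": "/mnt/data", "epochs": 10})` yields `python train.py --data_path /mnt/data --epochs 10`, where `data_path` and `epochs` are hypothetical parameter names.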

Received Platform Parameters

When a model training task starts, the platform dynamically generates the values of these parameters and passes them to the startup script as command-line parameters. Here, fill in the names of the corresponding command-line parameters that the startup script accepts.

Dataset Path: The mounting path of the model training dataset.

Incremental Training Weight Path: The mounting path of the incremental training weight file.

Task Output Path: The output path of the model training task. The platform allocates a unique persistent output path. In your code, write any data that needs to be kept to this path.
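A startup script might receive these platform parameters as in the following sketch. The parameter names `--data_path`, `--pretrained_path`, and `--output_path` are hypothetical; use whatever names you entered in the configuration. The helper `checkpoint_file` is likewise only an illustration of writing results under the task output path.

```python
import argparse
from pathlib import Path


def parse_platform_args(argv=None):
    """Parse the platform-supplied paths (parameter names are hypothetical)."""
    parser = argparse.ArgumentParser(description="Training entry point (sketch)")
    parser.add_argument("--data_path", required=True,
                        help="mount path of the training dataset")
    parser.add_argument("--pretrained_path", default=None,
                        help="mount path of incremental training weights, if any")
    parser.add_argument("--output_path", required=True,
                        help="persistent output path allocated by the platform")
    # Ignore arguments this parser does not define (for example hyperparameters).
    args, _unknown = parser.parse_known_args(argv)
    return args


def checkpoint_file(output_path, name="model.ckpt"):
    """Return a file path under the task output path, creating the directory."""
    out_dir = Path(output_path)
    out_dir.mkdir(parents=True, exist_ok=True)
    return out_dir / name
```

Anything written outside the task output path should be treated as temporary, since only that path is described as persistent.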

Hyperparameters

The hyperparameters for model training are passed to the startup script in the form of command-line parameters.
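As a sketch of how a startup script could accept hyperparameters from the command line, consider the following. The hyperparameter names and default values (`--learning_rate`, `--epochs`, `--batch_size`) are hypothetical examples, not names defined by the platform.

```python
import argparse


def parse_hyperparameters(argv=None):
    """Parse hyperparameters passed as command-line parameters (names are hypothetical)."""
    parser = argparse.ArgumentParser(description="Hyperparameters (sketch)")
    parser.add_argument("--learning_rate", type=float, default=0.001)
    parser.add_argument("--epochs", type=int, default=10)
    parser.add_argument("--batch_size", type=int, default=32)
    # Other arguments on the same command line are ignored by this parser.
    args, _unknown = parser.parse_known_args(argv)
    return args
```

Declaring a `type` for each hyperparameter converts the command-line strings to numbers once, at startup, so a malformed value fails early rather than in the middle of training.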

Recommended Resource Configuration

Resource Type: The type of resources used for model training.

CPU, GPU, NPU: The recommended resource specifications for model training.

Training Visualization

Select the supported visualization type. In your code, write the visualization output to the "Task Output Path" from the received platform parameters; the platform reads it automatically.

Distributed Training

If the code supports distributed training, you can select this option.
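What "supports distributed training" means depends on the framework. As one illustration, the sketch below assumes the process learns its position from the `RANK` and `WORLD_SIZE` environment variables, a convention used by launchers such as `torchrun`. Whether this platform sets these variables is an assumption; check how it launches distributed tasks before relying on them.

```python
import os


def distributed_context(env=None):
    """Read rank and world size, falling back to a single-process setup.

    RANK and WORLD_SIZE are assumed variable names, not confirmed for this platform.
    """
    env = os.environ if env is None else env
    rank = int(env.get("RANK", 0))
    world_size = int(env.get("WORLD_SIZE", 1))
    return rank, world_size


def shard(items, rank, world_size):
    """Give each process every world_size-th item, starting at its rank."""
    return items[rank::world_size]
```

With four processes, the process with rank 1 would handle items 1, 5, 9, and so on, so no sample is processed twice.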

Evaluation Configuration

After you turn on the evaluation configuration switch, the model gains the "evaluable" capability, and this model version can be used for model evaluation.

The page displays the configuration information used for model evaluation, including startup environment configuration, received platform parameters, hyperparameters, and recommended resource configuration.

Received Platform Parameters:

Dataset Path: The mounting path of the model evaluation dataset.

Model Weight Path: The mounting path of the weight file generated by model training.

Task Output Path: The output path of the model evaluation task. The platform allocates a unique persistent output path. In your code, write any data that needs to be kept to this path.
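An evaluation script could consume these three parameters roughly as follows. This is a sketch under assumptions: the parameter names (`--data_path`, `--weight_path`, `--output_path`) and the `metrics.json` file name are hypothetical, and the platform may expect results in a different form.

```python
import argparse
import json
from pathlib import Path


def parse_eval_args(argv=None):
    """Parse the platform-supplied evaluation paths (parameter names are hypothetical)."""
    parser = argparse.ArgumentParser(description="Evaluation entry point (sketch)")
    parser.add_argument("--data_path", required=True,
                        help="mount path of the evaluation dataset")
    parser.add_argument("--weight_path", required=True,
                        help="mount path of the weights produced by training")
    parser.add_argument("--output_path", required=True,
                        help="persistent output path allocated by the platform")
    return parser.parse_args(argv)


def save_metrics(output_path, metrics):
    """Write evaluation metrics as JSON under the task output path."""
    out_dir = Path(output_path)
    out_dir.mkdir(parents=True, exist_ok=True)
    target = out_dir / "metrics.json"  # hypothetical file name
    target.write_text(json.dumps(metrics, indent=2), encoding="utf-8")
    return target
```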

Inference Configuration

After you turn on the inference configuration switch, the model gains the "inferable" capability, and this model version can be used for model inference.

The page displays the configuration information used for model inference, including startup environment configuration, received platform parameters, inference parameters, and recommended resource configuration.

Received Platform Parameters:

Model Weight Path: The mounting path of the model directory in the model package.

Inference Service Listening Port

The port on which the inference service framework listens for HTTP inference requests.

Inference Service Access Endpoint

The access endpoint where the inference service framework processes HTTP inference requests.
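To show how the listening port and the access endpoint relate to the service code, here is a minimal sketch built on Python's standard `http.server` module. It is not a specific inference framework: `predict` is a placeholder that echoes its input, and the port and endpoint values you pass in are assumed to match those entered in the inference configuration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(payload):
    """Placeholder for a real model call: echoes the input back."""
    return {"echo": payload}


def handle_request(path, body, endpoint):
    """Return (HTTP status, response dict) for one POST request."""
    if path != endpoint:
        return 404, {"error": "unknown endpoint"}
    try:
        payload = json.loads(body)
    except ValueError:
        return 400, {"error": "request body is not valid JSON"}
    return 200, predict(payload)


def make_server(port, endpoint, host="0.0.0.0"):
    """Build an HTTP server that listens on `port` and serves `endpoint`."""

    class Handler(BaseHTTPRequestHandler):
        def do_POST(self):
            length = int(self.headers.get("Content-Length", 0))
            body = self.rfile.read(length)
            status, result = handle_request(self.path, body, endpoint)
            data = json.dumps(result).encode("utf-8")
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(data)))
            self.end_headers()
            self.wfile.write(data)

    return HTTPServer((host, port), Handler)
```

Calling `make_server(8080, "/predict").serve_forever()` would start the service, where `8080` and `/predict` are hypothetical values. If the configured port or endpoint differs from what the service actually uses, requests cannot reach it.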

Inference Data Type

Select the data type input to the inference model. The platform uses different methods to visualize the original inference data and inference results: